What Are the Risks of Using AI for Medical Second Opinions?

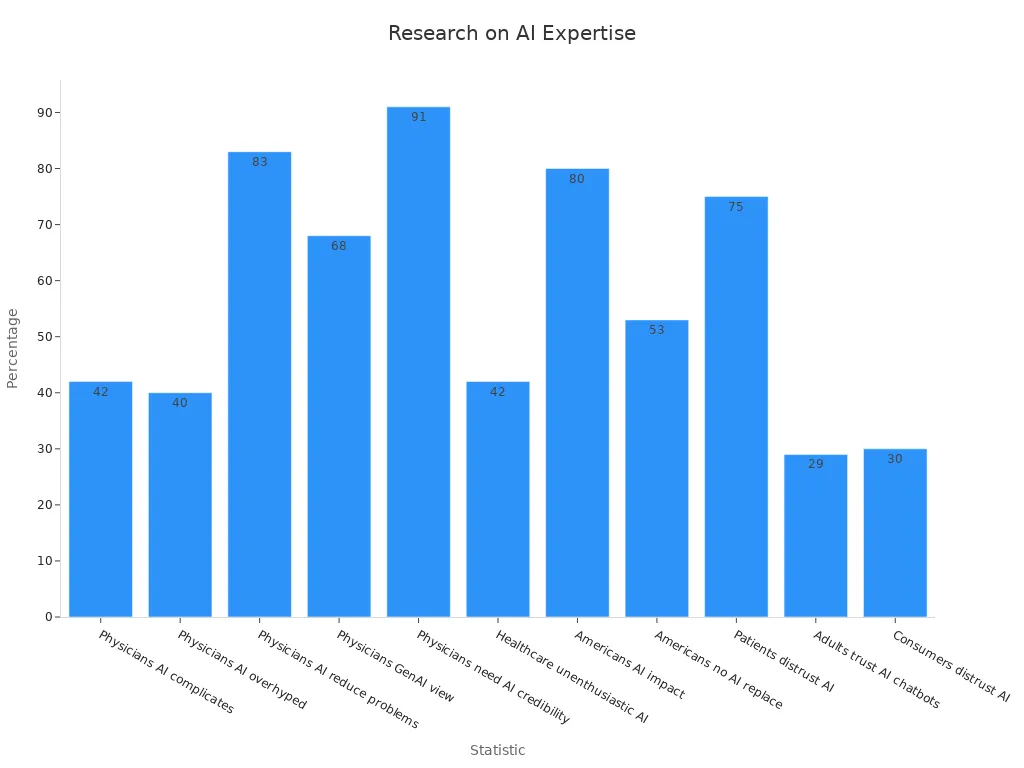

AI is transforming healthcare by offering innovative ways to obtain second opinions. However, using AI raises significant ethical concerns. For instance, 75% of U.S. patients report a lack of trust in AI within healthcare, highlighting the importance of transparent and trustworthy AI systems. While 80% of Americans believe AI can improve healthcare, 42% of doctors worry it may complicate patient care. These statistics underscore the ethical and social implications of AI. If you're considering AI for a second opinion on medical matters, understanding these risks can help you make informed decisions. Try using AI tools for a second opinion on medical matters! Explore platforms like GlobalGPT, which prioritize ethics and cutting-edge innovation.

Key Takeaways

AI can help in healthcare, but it has risks. Always check if AI systems are trustworthy before using them for medical advice.

AI's success depends on its training data. Make sure the data is recent and includes different groups to avoid wrong advice.

AI bias can cause unfair care. Use AI tools that treat all patients fairly.

Keeping patient information private is very important. Check how AI platforms store and use your health data to keep it safe.

AI should help doctors, not replace them. For the best results, always talk to a doctor as well as using AI.

Accuracy and Reliability in AI-Assisted Medical Opinions

Possible Mistakes in Diagnoses

Limits of AI Training Data

AI depends on the data it learns from. If the data is old or not diverse, AI might give wrong medical advice. For example, an AI trained on one group of people may not work well for others. This can cause mistakes, especially with rare or tricky health problems. Always check where the AI gets its data before trusting it.

Risks of Wrong Medical Advice

Wrong advice can harm your health. It might delay treatment or cause unnecessary tests. Measured AI accuracy also depends on how unsure answers are scored. When unsure answers are counted as mistakes, accuracy drops to 72.9%. When unsure answers are left out, accuracy rises to 84.5%. This shows why doctors and AI should work together. Combining human and AI knowledge often leads to better results.
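The two accuracy figures above come from scoring the same set of answers in two different ways. The sketch below shows the arithmetic. The counts (729 correct, 134 wrong, 137 unsure) are hypothetical numbers chosen only because they reproduce the percentages in the text; they are not taken from any study, and the function name `accuracy_two_ways` is made up for this example.

```python
def accuracy_two_ways(correct: int, wrong: int, unsure: int) -> tuple[float, float]:
    """Return (strict, lenient) accuracy as fractions between 0 and 1."""
    total = correct + wrong + unsure   # every case the AI was asked about
    answered = correct + wrong         # cases where the AI gave a definite answer
    strict = correct / total           # unsure answers count as mistakes
    lenient = correct / answered       # unsure answers are left out
    return strict, lenient


# Hypothetical counts, picked to match the figures quoted in the article.
strict, lenient = accuracy_two_ways(correct=729, wrong=134, unsure=137)
print(f"Unsure counted as mistakes: {strict:.1%}")
print(f"Unsure left out:            {lenient:.1%}")
```

The gap between the two numbers is made up entirely of cases where the AI did not commit to an answer, which are the cases a doctor most needs to review.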

Problems in Testing AI Tools

No Standard Testing Rules

Testing AI tools in healthcare is hard without clear rules. Different systems are tested in different ways, making it tough to compare them. Without set standards, trusting AI medical advice becomes tricky. Strong testing is needed before using AI for health decisions.

Challenges in Real-Life Use

AI works well in labs but struggles in real-world healthcare. Real-life cases involve different patients, symptoms, and histories. Testing AI for these situations is hard. This means AI might not always give reliable advice. Always consult a doctor alongside any AI medical opinion. Platforms like GlobalGPT focus on solving these problems with ethical solutions.

Ethical Implications of AI Bias in Healthcare

Sources of Bias in AI Systems

Historical Inequalities in Training Data

AI learns from the data it is given. If the data has unfair patterns, the AI might repeat them. For example, if certain groups are missing in past health records, the AI may not give them good advice. This can lead to unfair treatment and bigger health gaps. Always ask if an AI tool uses fair and diverse data.

Algorithmic Bias Affecting Medical Decisions

Bias in AI design can favor some groups over others. In healthcare, this can cause harm. For example, an AI tool might focus on saving money instead of helping patients. One study showed that Black patients who needed more care were given the same priority as healthier White patients. This shows how bias can hurt fairness and lead to bad results for some groups.

Impact on Medical Outcomes

Disparities in Care for Underrepresented Groups

AI bias can make health gaps worse. Some groups might get wrong advice or poor treatment. This can delay care and harm their health. Fixing this means making healthcare fair for everyone. Use AI tools for second opinions, but check if they follow fair rules.

Ethical Considerations in Addressing Bias

Fixing AI bias needs ethical thinking. Developers should make fair systems that reduce gaps. This means using fair data, testing for bias, and being open about how AI works. Ethical rules should guide AI use to protect at-risk groups. Platforms like GlobalGPT work on these issues with fairness and new ideas.
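One simple way to test for bias is to compare error rates across patient groups. The sketch below is a minimal illustration, not any platform's actual method. The function name `miss_rate_by_group`, the record layout, and the sample records are all assumptions made up for this example.

```python
from collections import defaultdict


def miss_rate_by_group(records):
    """records: iterable of (group, truly_sick, flagged_by_ai) tuples.

    Returns, for each group, the share of truly sick patients
    that the AI failed to flag.
    """
    sick = defaultdict(int)
    missed = defaultdict(int)
    for group, truly_sick, flagged in records:
        if truly_sick:
            sick[group] += 1
            if not flagged:
                missed[group] += 1
    return {group: missed[group] / sick[group] for group in sick}


# Made-up records for illustration only.
records = [
    ("A", True, True), ("A", True, True), ("A", True, False), ("A", False, False),
    ("B", True, False), ("B", True, False), ("B", True, True), ("B", False, True),
]
rates = miss_rate_by_group(records)
gap = max(rates.values()) - min(rates.values())
print(rates)
print(f"Gap between groups: {gap:.2f}")
```

A large gap between groups suggests the tool misses illness more often for some patients than for others, which is a signal to investigate before relying on it.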

Tip: Always talk to a doctor when using AI tools for medical advice.

Privacy and Data Security in AI-Driven Healthcare

Risks to Patient Privacy

Weaknesses in AI Data Storage

AI needs a lot of patient data to work. However, storing this data can be risky. Many healthcare systems struggle to keep data safe from hackers. Some algorithms can uncover hidden details in anonymized data. This could reveal private health information. Public trust is also low: a 2018 study found only 11% of Americans trusted tech companies with their health data, while 72% preferred sharing it with doctors. This shows people worry about AI systems protecting their privacy. Always check how your data is stored and secured when using AI tools.

| Risk Type | Description |
|---|---|
| | Worries about who can use and control patient data. |
| Reidentification of Anonymized Data | Algorithms might uncover identities from anonymized data, risking privacy. |
| Privacy Breaches | More healthcare data breaches are happening due to advanced AI tools. |
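The reidentification risk can be illustrated with a small check. Even with names removed, a record can stand out if its combination of ordinary fields is unique. The sketch below counts the smallest group of records that share the same values for a chosen set of fields, an idea known as k-anonymity. The function name `smallest_group_size`, the field names, and the sample rows are all hypothetical and exist only for this example.

```python
from collections import Counter


def smallest_group_size(rows, quasi_identifiers):
    """Return the size of the smallest group of rows that share the same
    combination of values for the given fields.

    A result of 1 means at least one person is unique on those fields
    and is therefore easier to re-identify.
    """
    keys = [tuple(row[field] for field in quasi_identifiers) for row in rows]
    return min(Counter(keys).values())


# Made-up "anonymized" records: no names, but ordinary fields remain.
rows = [
    {"zip": "02139", "birth_year": 1980, "sex": "F", "diagnosis": "asthma"},
    {"zip": "02139", "birth_year": 1980, "sex": "F", "diagnosis": "diabetes"},
    {"zip": "02139", "birth_year": 1975, "sex": "M", "diagnosis": "migraine"},
]
k = smallest_group_size(rows, ["zip", "birth_year", "sex"])
print(f"Smallest group size: {k}")
```

Here the third record is the only one with its combination of ZIP code, birth year, and sex, so anyone who knows those three facts about a person could link them to the diagnosis.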

Misuse of Private Health Information

Using patient data wrongly is a big problem. For instance, the Royal Free London NHS Foundation Trust shared patient records without permission. This shows how data can be shared without patients knowing. It also harms trust and patient rights. Always choose platforms that are clear about how they use your data. GlobalGPT is an example of a platform that focuses on ethical AI and responsible data use.

Ethical Use of Patient Data

Why Informed Consent Matters

You should know how your health data is used. Patients need to give clear permission before sharing their data with AI systems. But some AI tools use data without asking first. This breaks trust and puts privacy at risk. Pick platforms that respect your choices and explain their data use clearly. Ethical AI depends on honesty and open communication.

Protecting Privacy While Innovating

AI can improve healthcare, but it must respect privacy. Data should be handled carefully to protect patient rights. Rules and public values should guide how data is used. Look for AI tools that combine innovation with ethical practices. Platforms like GlobalGPT aim to protect privacy while using advanced technology.

Tip: Use AI tools for medical advice, but always check their privacy policies! Platforms like GlobalGPT focus on both innovation and protecting your data.


Accountability and Ethical Oversight in AI Systems

Figuring Out Who is Responsible for AI Mistakes

Problems with Blaming AI Errors

When AI makes mistakes, it’s hard to know who’s at fault. Is it the developers, the doctors, or the AI itself? Unlike people, AI decisions come from complex systems that are hard to understand. This makes it tricky to decide who is responsible. For example, if an AI gives the wrong diagnosis, should the hospital or the company that made the AI be blamed? These issues show why we need clear rules to make AI fair and equal in healthcare.

Effects of AI Mistakes on Patients and Doctors

AI errors can hurt patients and healthcare workers. A wrong diagnosis might delay treatment or cause unnecessary tests. This can harm your health and trust in doctors. Laws haven’t caught up with fast-growing AI, leaving gaps in responsibility. Ethical rules are needed to guide how AI mistakes are handled. Developers and hospitals must work together to fix errors fairly. By being open and careful, AI tools can be used safely. Platforms like GlobalGPT work to solve these problems with ethical solutions.

Rules and Ethics for AI in Healthcare

Why Clear Rules Are Important for AI in Medicine

Without clear rules, trusting AI in healthcare can feel risky. Guidelines are needed to make sure AI is safe and fair. Important rules include:

Data Privacy and Security: Keeping patient data safe and private.

FDA Approval Processes: Proving AI tools are safe and work well.

Algorithmic Bias and Fairness: Checking for bias and using fair data.

Transparency and Explainability: Explaining how AI makes decisions.

These rules help people trust AI and make healthcare fair for everyone.

How Institutions Can Keep AI Accountable

Hospitals, regulators, and developers must work together to make AI ethical. They should focus on getting patient permission, respecting choices, and reducing unfairness. Institutions also need to watch how AI affects patients and doctors. By following ethical practices, they can handle AI’s challenges and make healthcare better for all. Try using AI tools for medical advice! Platforms like GlobalGPT focus on fairness and new ideas to help you make safe choices.

Risks of Over-reliance on AI in Medical Decisions

Weakening Human Skills

How Critical Thinking Can Suffer in Healthcare

Depending too much on AI might harm doctors' thinking skills. If doctors rely on AI for diagnoses, they may lose problem-solving abilities. A study found 53% of Americans think AI cannot replace human skills. This shows why strong human judgment is still needed in healthcare. Overusing AI could make it harder for doctors to handle rare or tough cases.

Why Human Review Is Important in AI Decisions

Doctors must check AI suggestions to avoid mistakes. AI can misread symptoms or miss details about a patient’s history. These errors happen because of bias or limited training data. A doctor should always review what AI recommends. Combining human knowledge with AI tools can lower mistakes and improve care. Platforms like GlobalGPT focus on ethical AI, ensuring doctors oversee AI use.

Mixing AI with Human Judgment

Making AI Support Human Skills

AI should help doctors, not replace them. Studies show that using AI with human judgment improves accuracy. For example, doctors using AI during procedures can make better decisions. This teamwork ensures AI helps doctors instead of taking over their role. You can trust AI more when it works with skilled experts to provide safe care.

Teaching Doctors to Use AI

Doctors need training to work well with AI. Without knowing how AI works, they might not spot errors or understand its advice. Training should teach doctors to use AI while staying ethical. This helps ensure AI benefits all patients equally. Platforms like GlobalGPT offer tools that focus on fairness and ethics, helping doctors use AI responsibly.

Note: Relying too much on AI can harm fairness and ethics. Always ask a doctor when using AI for medical advice.

| Risk | Description |
|---|---|
| Algorithmic Bias | AI might give unfair results based on gender or ethnicity. |
| Lack of Transparency | Hard-to-understand AI decisions can confuse patients and doctors. |
| Data Privacy Concerns | Using AI more often raises worries about keeping data safe. |
| Accountability Issues | It’s unclear who is responsible when AI makes mistakes. |

AI can change healthcare by improving second opinions. But it has challenges too. Problems like bias, privacy, and responsibility are important. Clear and honest AI systems help people trust them. Studies show that unfair algorithms and privacy risks need fixing for AI to work well in healthcare. Doctors also use other clues to decide if AI is trustworthy.

Using AI with human skills leads to better care. Ethical rules and teamwork between creators and doctors can reduce problems. Tools like GlobalGPT focus on fair AI, helping you stay safe and make smart choices.


FAQ

What does AI do for second opinions in healthcare?

AI looks at medical data to give extra help with diagnoses. It finds patterns and suggests ideas to doctors. But always talk to a doctor to confirm what AI says. Platforms like GlobalGPT mix new technology with fairness to improve your care.

Can AI take the place of doctors?

AI cannot replace doctors. It works fast with big data but lacks human care and judgment. Doctors use AI to make better choices. Think of AI as a helper, not a replacement, for expert medical advice.

How does AI keep my health data private?

AI uses tools like encryption to protect your health data. Still, data leaks can happen. Pick platforms like GlobalGPT that focus on safe and honest data use. Knowing how your data is handled helps you stay informed.

Is AI good at giving medical second opinions?

AI can be very accurate, but it depends on its training data. It might have trouble with rare or hard-to-spot conditions. Use AI advice along with a doctor’s opinion for the best results. GlobalGPT works on making AI fair and reliable.

How can I use AI safely for health advice?

Check if the AI tool is trustworthy and always ask a doctor too. Choose platforms like GlobalGPT that care about fairness and privacy. Learn how the AI works and know its limits to stay safe.

Tip: Try AI tools like GlobalGPT for second opinions, but always include a doctor in your final decision.